One of my customer asked how to package PySpark application in one file with PyInstaller, after some research, I got the answer and share it here.

PyInstaller freezes (packages) Python applications into stand-alone executables, under Windows, GNU/Linux, Mac OS X, FreeBSD, Solaris and AIX.

Environment

- OS:MacOS Mojave 10.14.5

- Python :Anaconda 2019.03 for macOS

- Spark : spark-2.4.3-bin-hadoop2.7

- PostgreSQL: 11.2

- PostgreSQL JDBC: 42.2.5

- UPX: brew install upx

code is from PySpark Read/Write PostgreSQL

from future import print_function

import findspark

findspark.init(spark_home="spark")

from pyspark.sql import SparkSession

from pyspark import SparkConf,SparkContext

spark = SparkSession.builder\

.master("local[*]")\

.appName('jdbc PG')\

.getOrCreate()

df = spark.read.csv(path = '/Users/steven/Desktop/hengshu/data/iris.csv', header = True,inferSchema = True)

df=df.toDF(*(c.replace('.', '_').lower() for c in df.columns))

db_host = "127.0.0.1"

db_port = 5432

table_name = "iris"

db_name = "steven"

db_url = "jdbc:postgresql://{}:{}/{}".format(db_host, db_port, db_name)

options = {

"url": db_url,

"dbtable": table_name,

"user": "steven",

"password": "password",

"driver": "org.postgresql.Driver",

"numPartitions": 10,

}

options['dbtable']="iris"

df.write.format('jdbc').options(**options).mode("overwrite").save()

df1=spark.read.format('jdbc').options(**options).load()

df1.count()

df1.printSchema()

spark.stop()

After compile with the following code, we can get an app with 297M and named pyspark_pg.

pyinstaller pyspark_pg.py \ --onefile \ --hidden-import=py4j.java_collections\ --add-data /Users/steven/spark/spark-2.4.3-bin-hadoop2.7:pyspark\ --add-data /Users/steven/spark/jars/postgresql-42.2.5.jar:pyspark/jars/

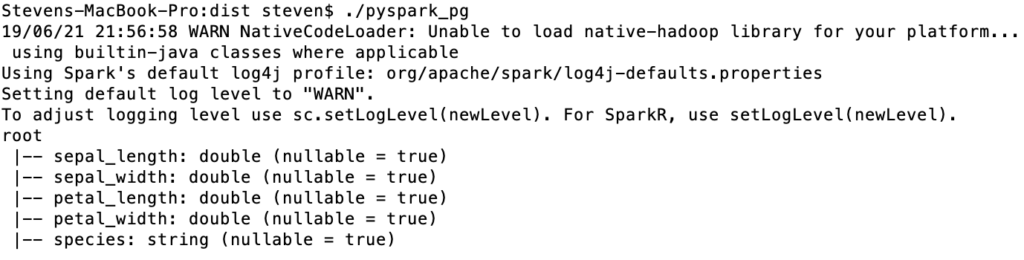

Let’s run it, ./pyspark_pg

btw, this app does not include JDK, but Python3.7 and Spark-2.4.3-bin-hadoop2.7

For Chinese version, please visit here.

Hi,

Thank you. The python program is running finw without pyinstaller. However, when i run the shell script built with pyinstaller, i am getting ModuleNotFoundError: No module named ‘py4j.java_collections’ error.

Any hints or help ?

Thank you

findspark.init(spark_home=”spark”)

This should be:

findspark.init(spark_home=”pyspark”)

right?